The term “artificial” intelligence raises the question: an artificial version of what? The answer, of course, is an artificial form of human, or “natural,” intelligence. There are many different definitions of what constitutes human intelligence. Some include the ability to solve problems, learn, think, or engage in abstraction. Others extend to emotional intelligence, creativity, wisdom, or even morality. Because it is difficult to arrive at one comprehensive definition of natural intelligence, it can be equally challenging to define “artificial” intelligence.

With that said, the notion of artificial intelligence is based on a relatively simple premise. As stated by John McCarthy, a pioneer in the field, and his co-authors: “Every aspect of learning or any other feature of intelligence can, in principle, be so precisely described that a machine can be made to simulate it.”1

Accordingly, artificial intelligence is often best thought of as a branch of computer science rather than as a specific technology.

a. The Multiple Definitions of AI

There is no single definition of Artificial Intelligence (AI) that applies in all contexts. It is worth starting, however, with how the IAPP itself defines AI in its document titled Key Terms for AI Governance:

Artificial intelligence is a broad term used to describe an engineered system that uses various computational techniques to perform or automate tasks. This may include techniques, such as machine learning, in which machines learn from experience, adjusting to new input data and potentially performing tasks previously done by humans. More specifically, it is a field of computer science dedicated to simulating intelligent behavior in computers. It may include automated decision-making.2

As this definition itself recognizes, AI is a broad term that is subject to many different meanings. It can be defined in terms of the tasks it seeks to perform or in terms of the academic discipline from which it originates.

The European Union’s Artificial Intelligence Regulation (commonly called the E.U. AI Act, which we will cover in much greater detail in Section II.C) defines AI from a systems perspective. Under the E.U. AI Act, an AI system is defined as “a machine-based system designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it received, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”3

The E.U. AI Act’s definition is rather complex. It may be easier to think of an AI system, as so defined, as having two primary components: (1) the system must operate with varying levels of autonomy; and (2) it must infer from its input how to generate outputs that can influence physical or virtual environments. As the Recitals to the E.U. AI Act explain, this definition is “based on key characteristics . . . that distinguish [AI] from simpler traditional software systems or programming approaches.”4

Therefore, the intent is that this definition does not apply to “systems that are based on the rules defined solely by natural persons to automatically execute operations,” such as typical software algorithms.5

Accordingly, “[a] key characteristic of AI systems is their capability to infer.”6
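To make the contrast between rules “defined solely by natural persons” and a capability to infer more concrete, the Python sketch below compares two hypothetical loan-approval functions. The first applies a threshold that a person wrote by hand; the second infers its threshold from labeled past decisions. The scenario, function names, and figures are invented for illustration only, and whether a real system qualifies as an AI system under the E.U. AI Act is a legal question that a toy example like this cannot settle.

```python
# Illustrative sketch: contrast a rule defined solely by a person with a
# system that infers its own decision rule from example data.
# The loan scenario, function names, and figures are all hypothetical.

def rule_based_approval(income: float) -> bool:
    """Fixed rule written entirely by a person: approve at 50,000 or more."""
    return income >= 50_000


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Infer an approval threshold from labeled past decisions.

    Tries each observed income as a cut-off and keeps the one that
    reproduces the most past decisions (ties go to the lower cut-off).
    """
    best_threshold, best_correct = None, -1
    for candidate in sorted(income for income, _ in examples):
        correct = sum((income >= candidate) == approved
                      for income, approved in examples)
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


def make_inferred_approval(examples: list[tuple[float, bool]]):
    """Return a decision function whose rule came from the data, not a person."""
    threshold = learn_threshold(examples)
    return lambda income: income >= threshold


# Hypothetical labeled history of past decisions: (income, approved?)
history = [(20_000, False), (35_000, False), (42_000, True),
           (60_000, True), (80_000, True)]
inferred_approval = make_inferred_approval(history)

print(learn_threshold(history))     # 42000
print(rule_based_approval(45_000))  # False: the hand-written rule says no
print(inferred_approval(45_000))    # True: the inferred rule says yes
```

A person still designed the learning procedure in the second function; what differs is that the decision rule itself was derived from data rather than written down directly, which is the kind of inference the Recitals point to.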

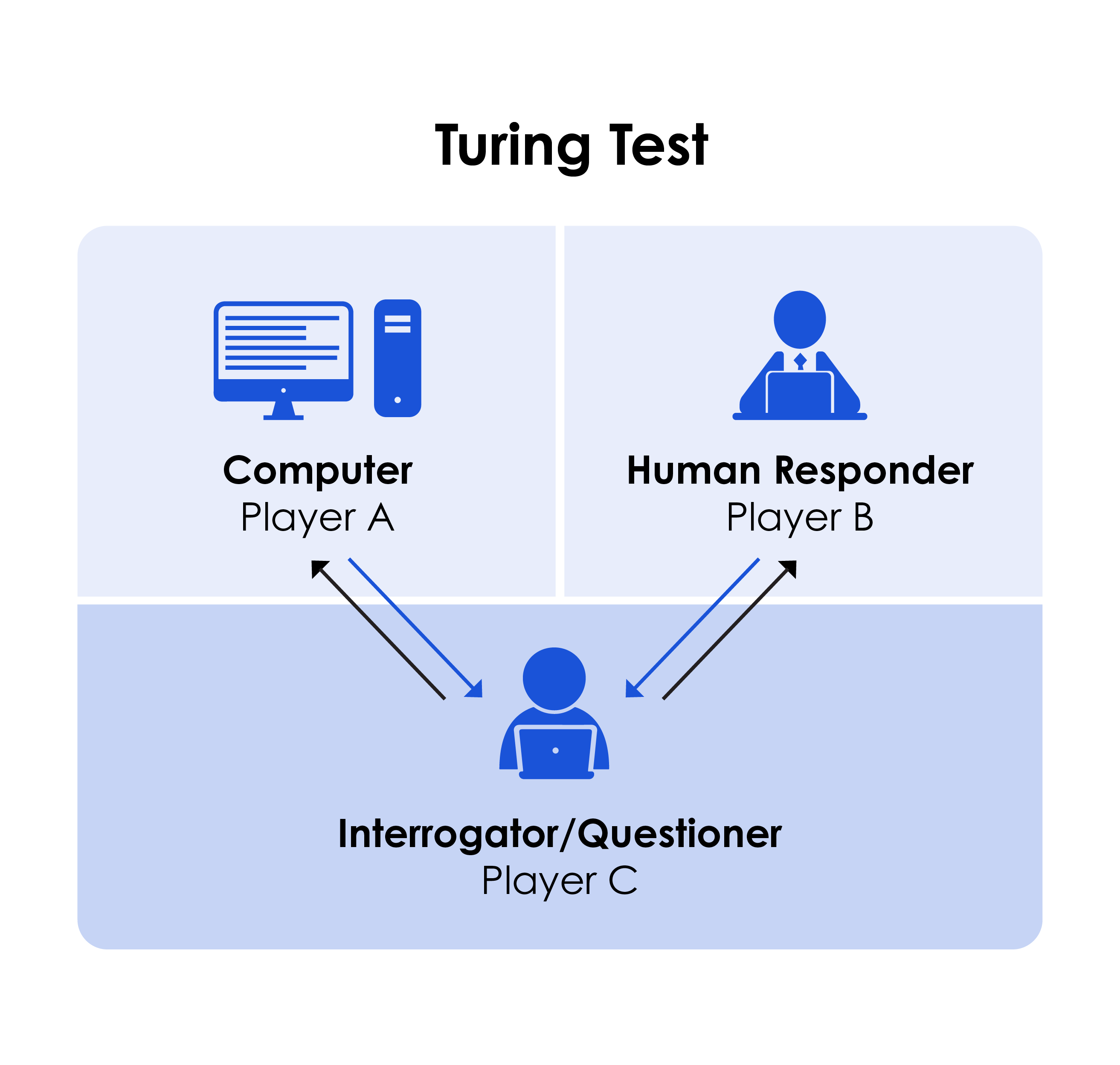

b. The Turing Test

From a conceptual or theoretical perspective, one should be able to distinguish AI from more general software applications by subjecting a system to some form of testing. The Turing Test, named after the famed computer scientist Alan Turing, is one means of testing a “machine’s ability to exhibit intelligent behavior equivalent to or indistinguishable from that of a human.”7

As originally formulated, the Turing Test asked whether a human would be able to differentiate between a computer-generated response and a human response.8

If a human cannot differentiate between the two responses, the computer-generated response would be considered AI. Put differently, AI should be able to trick a human into thinking that it is human.

The Turing Test, developed in 1949, was originally called the “imitation game.” In the game, an evaluator would judge natural-language conversations with two hidden partners, one human and one machine. Initially, the test was limited to written text, but it has since been adopted as a more general test of machine intelligence.

The Turing Test can itself be thought of as another way to define the term artificial intelligence. Is the computer-generated answer distinguishable from a human’s? If not, then it is AI under this more theoretical definition.
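Because the test turns on whether an evaluator can tell the two partners apart, it may help to picture how repeated rounds could be scored. The Python sketch below is hypothetical and is not Turing’s own procedure: it assumes each round ends with the evaluator guessing which hidden partner (labeled “A” or “B”) is the human, and it treats the machine as indistinguishable when the evaluator does no better than chance. The 50% baseline, the 0.05 tolerance, and all names and data are illustrative assumptions.

```python
# Hypothetical sketch of scoring repeated rounds of the imitation game.
# Each round, an evaluator converses with two hidden partners, labeled "A"
# and "B", and guesses which one is the human.
# Assumption (not from the text above): "cannot differentiate" is read as the
# evaluator's accuracy being no better than a 50% coin flip, plus a tolerance.

def identification_rate(guesses: list[str], humans: list[str]) -> float:
    """Fraction of rounds in which the evaluator correctly picked the human."""
    if not guesses or len(guesses) != len(humans):
        raise ValueError("need one guess per round and at least one round")
    correct = sum(guess == human for guess, human in zip(guesses, humans))
    return correct / len(guesses)


def machine_passes(rate: float, chance: float = 0.5,
                   tolerance: float = 0.05) -> bool:
    """Treat the machine as indistinguishable if accuracy stays near chance.

    The 0.05 tolerance is arbitrary; a real study would use a proper
    statistical test and many more rounds.
    """
    return rate <= chance + tolerance


# Hypothetical results from ten rounds: the evaluator's guess and the
# partner who was actually human in each round.
guesses = ["A", "B", "B", "A", "A", "B", "A", "B", "B", "A"]
humans = ["A", "A", "B", "B", "A", "A", "B", "B", "A", "A"]

rate = identification_rate(guesses, humans)
print(rate)                  # 0.5
print(machine_passes(rate))  # True
```

A single round says little, since a coin flip is right half the time; scoring many rounds is what gives “cannot differentiate” a measurable meaning.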

EXPLANATORY NOTE: Many consider the beginning of AI to be Alan Turing’s imitation game. The term “artificial intelligence” was not officially coined, however, until 1956 as part of the Dartmouth Summer Research Project on AI (a/k/a the “Dartmouth Conference”). This project brought together researchers from multiple distinct fields of study to explore the possibilities of intelligent machines.

The development of AI has seen peaks and valleys since the Dartmouth Conference gave birth to the unified field of AI research. These are commonly referred to as AI summers (periods of rapid progress) and AI winters (periods of intense skepticism). Below is a brief timeline:

- First AI Summer (mid-1950s to mid-1970s)
- First AI Winter (mid-1970s to mid-1980s)
- Second AI Summer (mid-1980s to late-1980s)
- Second AI Winter (late-1980s to late-1990s)
- Renaissance and the Era of Big Data (late-1990s to 2011)
- The AI Boom (2011 to Present)

The most recent version of the AIGP Body of Knowledge indicates that the history of AI development is no longer tested on the AIGP exam. Having some basic background in the history of AI, however, is helpful for understanding its development and what it is from a conceptual perspective. Therefore, we have continued to include this information here.

c. Common Elements

There are several key elements that form the basis for nearly all definitions of AI. These elements help differentiate AI from simpler, traditional software systems. They include: (1) technology; (2) automation; (3) human involvement; and (4) the expected output.

What varies from one definition to the next are the exact contours of each of these four elements. Under most definitions, the technology component is defined as an engineered or machine-based system, or as a logic, knowledge, or learning algorithm. Definitions allow for varying levels of automation, but each makes clear that the system can act on its own or respond dynamically to inputs or its environment. The role of humans is typically defined in terms of setting the system’s objectives or providing its input or data, although human involvement can also include training the system. The output of an AI system is commonly defined to include content, predictions, recommendations, or decisions.

Consider the E.U. AI Act’s definition of “AI systems” set forth above. It can be broken down according to these four elements as follows:

- Technology: A “machine-based system”
- Automation: “[D]esigned to operate with varying levels of autonomy”
- Human Involvement: “[E]xhibit[s] adaptiveness after deployment and that, for explicit or implicit objectives”
- Expected Output: “[I]nfers, from the input it received, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments”

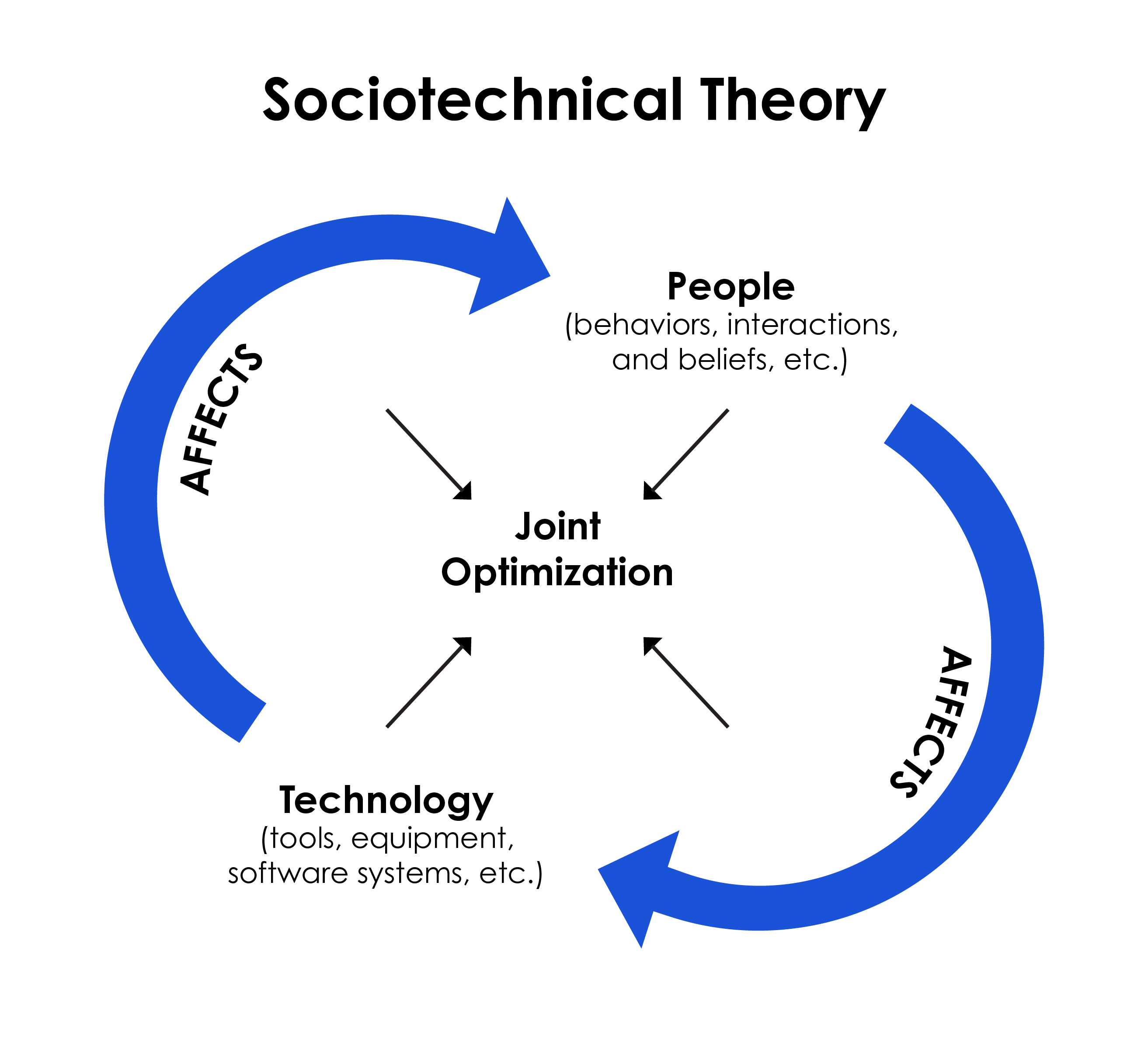

d. AI as a Socio-Technical System

As a final point, it is worth noting that AI is an inherently Socio-Technical System, which is to say that its use involves interactions between people and technology, each of which helps shape the other.9

Put differently, we as humans help shape technology, yet at the same time technology also shapes us as a society. The term “socio-technical system” refers to this two-way cycle.

Because of this cycle, it is important to consider all relevant stakeholders in developing, designing, deploying, or imposing governance on AI systems. Bringing in stakeholders who can understand the impacts an AI system has on society is key. For example, AI systems should be developed by a cross-functional team that draws on social scientists, user experience (UX) designers, and others. Development of AI should not be left solely to the computer scientists and software engineers who do the actual building; rather, a holistic approach that can anticipate downstream impacts should be used to help shape AI development. We will return to this point later in Module I.C.3.